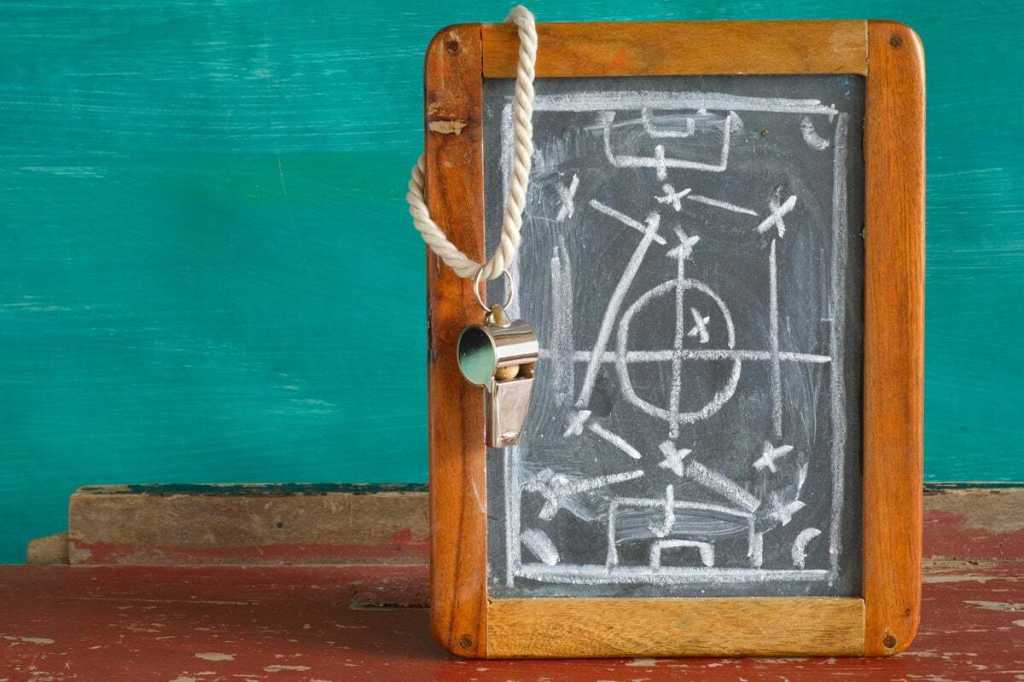

Forget the shiny demos — this 90-day AI playbook proves results come from fixing real pain points, not chasing the next big tool.

This is the playbook I wish I had on day one. It is cross-industry by design, written for leaders who need fast outcomes and governance that scales.

I did not become an AI champion by chasing shiny demos. I became one after a meeting where a director asked a simple question: “When will we see value?” We had a spreadsheet full of AI vendors, calendar holds for product pitches and threads praising favorite tools. We had enthusiasm for solutions. We did not have a shared problem. The room went quiet. I promised 90 days.

Those 90 days were my first run at the model I have relied on ever since. First, we stopped shopping. Instead, we focused on the work: where time was bleeding, where quality slipped, where customers waited. We made a small portfolio visible, set guardrails and funded the work like a product, not a science fair. It worked because we made progress every week, iterating toward solutions the organization could actually use, and we shut down whatever stalled. By day 90, we had fewer idle pilots, a clean story for the board and business owners asking for the next tranche.

The 90-day operating model I now run

I start with a narrow bet: three to five generative AI use cases that already have a queue of pain inside the organization. Instead of building from scratch, we look first at hosted solutions and out-of-the-box tools that can be configured quickly. Operations might test tools that speed up reporting, HR might trial résumé screeners, finance could use invoice summarizers and sales could pilot proposal generators. The point is not the tool itself but how fast a department can prove a tool’s usefulness in its real workflows.

Week 1

Week 1 is always a listening tour. I sit with the people doing the work and time the steps. I capture not only how long tasks take but where frustrations show up: systems that do not talk to each other, redundant data entry, handoffs that stall. I ask three simple questions: What slows you down? What do you do twice? What would you stop doing if you could?

Every answer gets logged in a shared workbook. For each process, we document:

- Task description. The exact work being done, written in plain language.

- Baseline measures. Minutes or hours required, error rates or backlog counts.

- Pain points. Friction described by the users themselves, not just my observations.

- Data sources. Where inputs come from, who owns them and how often they are updated.

- Criticality. Whether the task affects revenue, compliance, customer commitments or internal efficiency.

- Owner. The department lead or supervisor responsible for outcomes.

By the end of the week, each use case has a one-pager that brings this all together: the problem in plain words, the baseline data to show where we are starting, the target outcome stated as a number we can measure and a named business owner who commits to shepherding the work.
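For teams that keep the workbook in code rather than a spreadsheet, here is a minimal sketch of one row and the one-pager built from it. The class and field names are hypothetical, and it assumes the baseline is measured in minutes per task; the only rule it enforces is the one above: no one-pager without a named owner and a measurable target.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical schema for one row of the Week 1 workbook.
# Field names are illustrative; the columns mirror the list above.
@dataclass
class WorkbookEntry:
    task_description: str   # the work, in plain language
    baseline_minutes: float # assumed baseline measure: minutes per task
    pain_points: list[str]  # friction in the users' own words
    data_sources: list[str] # where inputs come from
    criticality: str        # e.g. "revenue", "compliance", "internal"
    owner: Optional[str]    # accountable department lead, if named

@dataclass
class OnePager:
    problem: str
    baseline_minutes: float
    target_minutes: float   # the target outcome as a measurable number
    business_owner: str

def to_one_pager(entry: WorkbookEntry, target_minutes: float) -> OnePager:
    """Build a one-pager, refusing use cases without an owner or a real target."""
    if not entry.owner:
        raise ValueError("a use case needs a named business owner")
    if target_minutes >= entry.baseline_minutes:
        raise ValueError("the target must improve on the baseline")
    return OnePager(entry.task_description, entry.baseline_minutes,
                    target_minutes, entry.owner)
```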

Week 2

Week 2 is about constraints. Data is classified at the source. Prompts and outputs are logged. Anything affecting financial or operational outcomes keeps a human in the loop. Hosted solutions make the shortlist only if they offer exportable data, transparent pricing and basic security assurances.
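The Week 2 constraints can be expressed as a thin wrapper around every tool call. This is a sketch under stated assumptions: the classification labels, the rule that only "public" and "internal" data may reach a hosted tool, and the function names are all hypothetical, not a specific product's API.

```python
import json
from datetime import datetime, timezone

# Hypothetical labels; the article says data is classified at the source
# but does not name a scheme.
ALLOWED_FOR_HOSTED_TOOLS = {"public", "internal"}

def on_shortlist(exportable_data: bool, transparent_pricing: bool,
                 security_assurances: bool) -> bool:
    # A hosted tool qualifies only if it meets all three criteria.
    return exportable_data and transparent_pricing and security_assurances

def log_interaction(log: list, use_case: str, classification: str,
                    prompt: str, output: str, affects_outcomes: bool) -> dict:
    """Block disallowed data, append an audit record, flag human review."""
    if classification not in ALLOWED_FOR_HOSTED_TOOLS:
        raise PermissionError(f"{classification!r} data may not leave the source")
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "use_case": use_case,
        "classification": classification,
        "prompt": prompt,
        "output": output,
        # Human in the loop for anything touching financial or operational outcomes.
        "needs_human_review": affects_outcomes,
    }
    log.append(json.dumps(record))  # prompts and outputs are logged
    return record
```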

Weeks 3 through 9

Weeks 3 through 9 follow a strict cadence:

- Monday is a 20-minute portfolio standup where each owner reports one number: hours saved or errors avoided last week. If the number is zero for two consecutive weeks, we stop the work.

- Midweek, teams configure or tune their tools, adjust prompts, test connectors and trial add-ons.

- Fridays are for users. We sit with them, watch the tool in action and capture where it helps and where it gets in the way.
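The Monday stop rule is simple enough to automate on the portfolio dashboard. A minimal sketch, assuming each owner's reported numbers are stored oldest first; the function name is hypothetical.

```python
def should_stop(weekly_values: list[float], zero_weeks: int = 2) -> bool:
    """True if the last `zero_weeks` reported numbers are all zero.

    weekly_values holds the one number an owner reports at the Monday
    standup (hours saved or errors avoided), oldest first.
    """
    if len(weekly_values) < zero_weeks:
        return False  # not enough history to apply the rule yet
    return all(v == 0 for v in weekly_values[-zero_weeks:])
```

A single zero week followed by a recovery does not trigger the rule; only consecutive zeros do.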

Prioritization stays plain and practical. We score the backlog by reach, impact, confidence and effort. We drop anything that requires new data we cannot legally or ethically obtain. We prefer steps that remove manual work over flashy features that add complexity. Platform development only enters the picture when hosted tools cannot deliver.
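Scoring by reach, impact, confidence and effort matches the widely used RICE method. The sketch below assumes its common formula, reach × impact × confidence ÷ effort; the article names only the four factors, so treat the formula, the scales in the comments and the names as assumptions.

```python
from dataclasses import dataclass

@dataclass
class BacklogItem:
    name: str
    reach: float       # people or transactions affected per period
    impact: float      # assumed scale, e.g. 0.25 (minimal) to 3 (massive)
    confidence: float  # 0.0 to 1.0
    effort: float      # person-weeks; must be greater than zero
    needs_unobtainable_data: bool = False  # data we cannot legally or ethically obtain

def rice_score(item: BacklogItem) -> float:
    # Common RICE formulation (an assumption, not stated in the article).
    return item.reach * item.impact * item.confidence / item.effort

def prioritize(backlog: list[BacklogItem]) -> list[BacklogItem]:
    """Drop items that need unobtainable data, then sort by score, highest first."""
    eligible = [i for i in backlog if not i.needs_unobtainable_data]
    return sorted(eligible, key=rice_score, reverse=True)
```

Filtering before scoring means a high-scoring idea that depends on data we cannot obtain never reaches the top of the list.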

Funding follows the work, not the hype. I divide the budget into three segments: foundations (identity, data access, observability, policy enforcement); short trials with out-of-the-box tools, capped at four weeks; and scale-ups built on top of those tools once business owners prove their value. The rule is simple: no scale-up without an owner, a measure and a rollback plan. Subscription costs sit in the same dashboard as cloud and labor, so no one is surprised.
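The stage gate reduces to a few checks. A minimal sketch, assuming a trial's age is tracked in whole weeks and that "value proven" is a yes/no call made by the business owner; the class, fields and decision labels are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

MAX_TRIAL_WEEKS = 4  # trials with out-of-the-box tools are capped at four weeks

@dataclass
class Project:
    name: str
    weeks_in_trial: int = 0
    owner: Optional[str] = None
    measure: Optional[str] = None        # e.g. "hours saved per week"
    rollback_plan: Optional[str] = None
    value_proven: bool = False           # business owner's call

def can_scale(p: Project) -> bool:
    # No scale-up without an owner, a measure and a rollback plan, plus proven value.
    return p.value_proven and all([p.owner, p.measure, p.rollback_plan])

def gate_decision(p: Project) -> str:
    """Return 'scale', 'end trial' or 'continue trial'."""
    if can_scale(p):
        return "scale"
    if p.weeks_in_trial >= MAX_TRIAL_WEEKS:
        return "end trial"  # the four-week cap is reached
    return "continue trial"
```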

Two practices keep us honest. First, a one-page summary leaders can read without context: use case, owner, weekly value, cumulative value, risk notes and next decision date. Second, a red button culture: if a tool misfires in a way that could harm a colleague or disrupt a workflow, anyone can pause its use.
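Each row of the one-page summary can be generated from the same weekly numbers the owners report on Mondays. A minimal sketch, assuming weekly value is the latest reported number and cumulative value is their running total; the `paused` flag is a hypothetical way to record a red-button pause, and all names are illustrative.

```python
from dataclasses import dataclass
from datetime import date

@dataclass
class UseCaseStatus:
    use_case: str
    owner: str
    weekly_values: list[float]  # hours saved (or errors avoided) per week, oldest first
    risk_notes: str
    next_decision: date
    paused: bool = False        # set when anyone presses the red button

def summary_row(s: UseCaseStatus) -> dict:
    """One row of the leader-facing one-pager."""
    return {
        "use_case": s.use_case,
        "owner": s.owner,
        "status": "paused" if s.paused else "active",
        "weekly_value": s.weekly_values[-1] if s.weekly_values else 0.0,
        "cumulative_value": sum(s.weekly_values),
        "risk_notes": s.risk_notes,
        "next_decision": s.next_decision.isoformat(),
    }
```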

Week 10

By week 10, every department has tested and refined several increments. The outcomes vary. Some teams report shorter cycles for routine tasks. Others see fewer errors in data entry or reporting. Approvals that once sat in queues for days now move faster because drafts arrive cleaner and more complete. The specifics are different, but the pattern is the same. We do not replace people. We return the time to them. Teams use that time to coach new hires, visit client sites or handle exceptions that require judgment. That shift is where the real value shows up, not just in efficiency metrics but in the quality of work and relationships across the organization.

Weeks 11 through 13

Weeks 11 through 13 are for hardening. We check vendor service levels, lock down secrets and write the runbooks the ops team deserves. Finance helps us translate time saved into dollars only when hours are reallocated. Trials that do not deliver are shut down. On day 90, leadership sees the summary, the value realized and the pipeline for the next quarter. The question shifts from whether to where next.
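The finance rule, counting dollars only for reallocated hours, is one line of arithmetic. A minimal sketch, assuming finance supplies a fully loaded hourly labor rate; the function and parameter names are hypothetical.

```python
def realized_value(hours_saved: float, hours_reallocated: float,
                   loaded_hourly_rate: float) -> float:
    """Dollar value counted only for hours actually moved to other work.

    Reallocated hours are capped at hours saved, so the figure can never
    exceed what the tool freed up.
    """
    if min(hours_saved, hours_reallocated, loaded_hourly_rate) < 0:
        raise ValueError("inputs must be non-negative")
    return min(hours_saved, hours_reallocated) * loaded_hourly_rate
```

Hours saved but left idle contribute nothing, which keeps the board number conservative.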

The framework behind the story

The framework that carries this model is simple:

- Portfolio cadence. Three to five use cases per quarter. Weekly 20-minute check-ins. Stop what stalls.

- Risk controls. Classify data, audit outputs and keep a human in the loop for critical decisions.

- Funding guardrails. Stage-gate every project: foundation, trial, scale. Four-week caps on trials. No scale without an owner and a rollback plan.

- Executive-ready reporting. One-page summaries, dashboards that show weekly and cumulative value and quarterly updates tied directly to business outcomes.

This structure is not limited to any one sector. It is effective in service industries, manufacturing, healthcare, education and logistics — anywhere leaders require quicker results, managed risk and accountable reporting.

The wrap-up: What stuck, what I would change

Three lessons stay with me. First, cadence beats cleverness. Consistent progress every week matters more than a perfect plan. Second, plain guardrails build trust. Leaders sign off faster when they see the same rules applied across finance, operations, HR and sales. Third, money follows proof. Once finance can trace time saved to margin improved or errors avoided, funding is no longer a debate.

What would I change next time? I would bring HR and communications in earlier. Generative AI changes how people work long before it changes org charts. When managers know how to coach to new workflows and when employees see their input reflected in the tools, adoption accelerates without a mandate.

If you take one thing from my 90-day map, make it this: Do not chase the perfect vendor. Start with the pain your people feel every day. Agree on the rules. Show progress weekly. Once value is proven, build on the platforms already in place. The point is not to deploy AI. The point is to create compounding time across the organization. Once you do, the operating model sells itself.

This article is published as part of the Foundry Expert Contributor Network.
